The robots.txt file is an essential tool for webmasters, allowing them to control how search engine crawlers and other automated bots interact with their websites. The message “URL blocked by robots.txt” in Google Search Console means that Googlebot did not crawl certain URLs because a rule in this file disallows them. This article walks through common ways to block URLs, with specific examples, and explains how to block AI crawlers that collect content for large language models (LLMs).

Understanding robots.txt

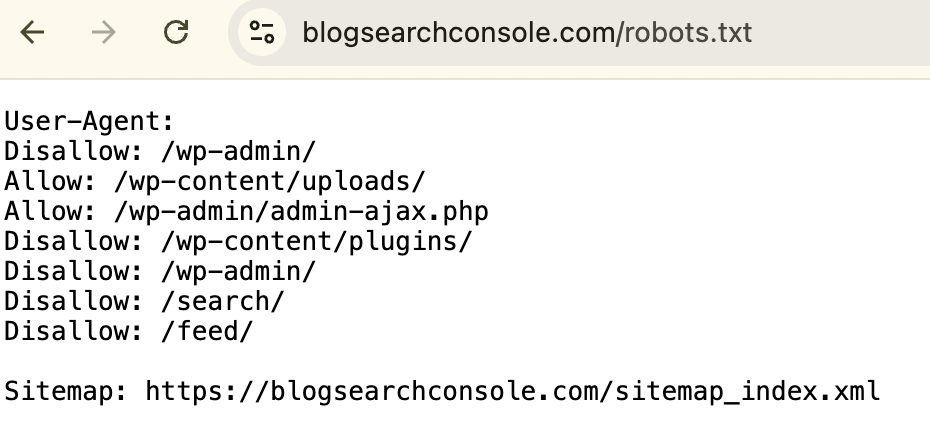

The robots.txt file is a plain text document placed at the root of a website, for example https://www.example.com/robots.txt. It tells crawlers which parts of the site they may access and which they should skip. The two primary directives used in this file are User-agent and Disallow.
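Because the file must sit at the root, every scheme, host and port combination has its own robots.txt, and a subdomain is not covered by the file on the main domain. The minimal sketch below derives the governing robots.txt URL for any page. The function name robots_txt_url and the example URLs are hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Hypothetical helper: return the robots.txt URL that governs page_url."""
    parts = urlsplit(page_url)
    # Keep the scheme and host (including any port); drop path, query and fragment
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://www.example.com/blog/post?id=3#top"))
print(robots_txt_url("https://shop.example.com/cart"))
```

The first call prints https://www.example.com/robots.txt. The second prints https://shop.example.com/robots.txt, because the subdomain needs a file of its own.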

– User-agent specifies which crawler the rules apply to, and can be set to a specific bot or use an asterisk (*) to apply to all bots.

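– Disallow indicates which URL paths should not be crawled. Each value is matched against the beginning of the URL path, so Disallow: /admin/ covers every URL under /admin/.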

Examples of blocking URLs

Block all crawling

To prevent all bots from accessing any part of your site, use:

User-agent: *
Disallow: /

Block specific pages

To block a particular page for all bots, such as “private.html”:

User-agent: *
Disallow: /private.html

Block specific directories

To block an entire directory for all bots, such as “admin”:

User-agent: *
Disallow: /admin/
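Because Disallow values are matched as prefixes of the URL path, a rule can cover more, or less, than it appears to. The sketch below checks the page and directory rules above with Python's standard urllib.robotparser module. ExampleBot and example.com are placeholders. This parser only does simple prefix matching, so it suits rules like these but not the wildcard rules that follow.

```python
from urllib.robotparser import RobotFileParser

# The page and directory rules from the examples above, combined in one group for all bots
ROBOTS_TXT = """\
User-agent: *
Disallow: /private.html
Disallow: /admin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse in-memory text instead of fetching a URL

# "ExampleBot" is a placeholder user agent; it falls under the * group
for path in ["/admin/users", "/admin", "/administrator", "/private.html?ref=nav", "/about"]:
    allowed = parser.can_fetch("ExampleBot", "https://example.com" + path)
    print(f"{path}: {'allowed' if allowed else 'blocked'}")
```

Here /admin/users and /private.html?ref=nav are reported as blocked. /admin (no trailing slash), /administrator and /about remain crawlable.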

Block specific file types

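To prevent crawling of certain file types for all bots, such as PDFs, use the wildcards supported by Google and Bing. Here * matches any sequence of characters and $ marks the end of the URL: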

User-agent: *
Disallow: /*.pdf$

Block URLs with query strings

To block every URL that contains a query string (a ? followed by parameters) for all bots:

User-agent: *
Disallow: /*?*
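Python's standard urllib.robotparser only does prefix matching, so it cannot check the * and $ patterns in the PDF and query-string rules. The hand-rolled sketch below follows the wildcard semantics documented by Google: * matches any run of characters, a trailing $ anchors the end, and matching starts at the beginning of the path plus query. The function name rule_matches is hypothetical.

```python
import re


def rule_matches(pattern: str, path: str) -> bool:
    """Hypothetical helper: does a robots.txt path pattern match a URL path (plus query)?"""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]  # strip the end anchor before escaping
    # Escape regex metacharacters, then turn each escaped '*' back into '.*'
    regex = re.escape(pattern).replace(r"\*", ".*")
    if anchored:
        regex += "$"
    # re.match only matches at the start, just as rules apply from the start of the path
    return re.match(regex, path) is not None


print(rule_matches("/*.pdf$", "/docs/report.pdf"))             # True
print(rule_matches("/*.pdf$", "/docs/report.pdf?download=1"))  # False: $ requires the URL to end in .pdf
print(rule_matches("/*?*", "/shop?color=red"))                 # True
```

Note the second case. Because of the $ anchor, a PDF URL with a query string appended is not covered by /*.pdf$. Matching is also case-sensitive, so /report.PDF would not match either.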

Allow specific pages within blocked directories

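To block an entire directory for all bots but still allow access to one specific page inside it, add an Allow rule. Google applies the most specific rule, meaning the one with the longest matching path, so the Allow line wins for that page: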

User-agent: *
Disallow: /private/
Allow: /private/allowed-page.html
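Google documents that when Allow and Disallow rules conflict, the rule with the longest matching path wins, and on a tie the less restrictive Allow rule wins. The sketch below illustrates that precedence using plain prefix matching, with no wildcards for brevity. The function is_allowed is a hypothetical helper. Python's urllib.robotparser, by contrast, applies the first matching rule in file order, so its answer can depend on the order of the lines.

```python
def is_allowed(rules: list[tuple[str, str]], path: str) -> bool:
    """Hypothetical helper: longest matching rule wins; on a tie, Allow wins.

    rules is a list of (directive, path) pairs such as ("Disallow", "/private/").
    Uses plain prefix matching only (wildcards are ignored in this sketch).
    """
    best_len = -1
    allowed = True  # no matching rule means the path may be crawled
    for directive, rule_path in rules:
        if not rule_path or not path.startswith(rule_path):
            continue  # an empty value matches nothing; skip non-matching prefixes
        is_allow = directive.lower() == "allow"
        if len(rule_path) > best_len or (len(rule_path) == best_len and is_allow):
            best_len = len(rule_path)
            allowed = is_allow
    return allowed


RULES = [("Disallow", "/private/"), ("Allow", "/private/allowed-page.html")]
print(is_allowed(RULES, "/private/allowed-page.html"))  # True: the longer Allow rule wins
print(is_allowed(RULES, "/private/other.html"))         # False: only the Disallow rule matches
```

Because the decision depends only on rule length, listing the Allow line before or after the Disallow line gives the same result under this model.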

Block specific bots

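You can also target specific bots by name. Each crawler follows only the group whose User-agent line matches it most specifically, and falls back to a * group if no group names it. In this example, Googlebot may crawl everything except /no-google/, and Bingbot may crawl nothing: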

User-agent: Googlebot
Disallow: /no-google/

User-agent: Bingbot
Disallow: /
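To see how per-bot groups play out, the sketch below feeds the example above to Python's urllib.robotparser and asks what each crawler may fetch. DuckDuckBot stands in for any crawler that the file does not name. The URLs are placeholders.

```python
from urllib.robotparser import RobotFileParser

# The per-bot groups from the example above; there is no * group
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /no-google/

User-agent: Bingbot
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for agent in ["Googlebot", "Bingbot", "DuckDuckBot"]:
    for url in ["https://example.com/blog/", "https://example.com/no-google/page.html"]:
        print(agent, url, parser.can_fetch(agent, url))
```

Googlebot is blocked only under /no-google/, and Bingbot is blocked everywhere. DuckDuckBot, which no group names, is unrestricted because the file has no * group to fall back on.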

Blocking AI bots and LLMs

As AI technologies evolve, many webmasters are concerned about their content being collected without permission by AI crawlers, such as OpenAI's GPTBot, that gather training data for LLMs. The following rules block the best-known of these crawlers, provided they honor robots.txt:

Blocking specific AI bots

To prevent GPTBot from accessing your site, add the following lines to your robots.txt file:

User-agent: GPTBot
Disallow: /

Blocking multiple AI bots

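To block several known AI-related crawlers, add a group for each user agent. OAI-SearchBot is OpenAI's crawler for ChatGPT's search features, so blocking it can keep your pages out of those results. CCBot is Common Crawl's crawler, and Scrapy is the default user-agent token of the open-source Scrapy scraping framework: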

User-agent: OAI-SearchBot
Disallow: /

User-agent: CCBot
Disallow: /

User-agent: Scrapy
Disallow: /
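If the list of crawlers grows, it can be easier to generate the rules. Google and RFC 9309 also allow several User-agent lines to share one group, which has the same effect as the separate groups above. The sketch below builds such a group and checks it with urllib.robotparser. build_block_group is a hypothetical helper, and the agent list simply combines GPTBot with the bots named above.

```python
from urllib.robotparser import RobotFileParser


def build_block_group(agents: list[str]) -> str:
    """Hypothetical helper: one group listing several User-agent lines that share Disallow: /."""
    lines = [f"User-agent: {agent}" for agent in agents]
    lines.append("Disallow: /")
    return "\n".join(lines) + "\n"


AI_AGENTS = ["GPTBot", "OAI-SearchBot", "CCBot", "Scrapy"]
robots_txt = build_block_group(AI_AGENTS)
print(robots_txt)

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

# The listed AI crawlers are blocked; crawlers not named in the group, such as Googlebot, are not
for agent in AI_AGENTS + ["Googlebot"]:
    print(agent, parser.can_fetch(agent, "https://example.com/article"))
```

Because the group contains no * entry, search crawlers keep their normal access.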

General blocking for AI scrapers

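robots.txt has no way to address AI bots as a category. The only catch-all is the * wildcard, which blocks every compliant crawler, including search engine crawlers such as Googlebot and Bingbot, and therefore also stops normal search crawling of your site: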

User-agent: *
Disallow: /

Limitations of robots.txt

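While the robots.txt file is a powerful tool for controlling crawler access, compliance with it is voluntary. The file is a request, not an access control mechanism: reputable crawlers follow it, but malicious bots may ignore these directives entirely. Therefore, robots.txt helps you manage legitimate crawler traffic but is not foolproof against scraping. It also controls crawling rather than indexing. Google can still index a blocked URL, without its content, if other pages link to it, so use a noindex rule on a crawlable page, or password protection, to keep a page out of search results.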

Responding to “Blocked by robots.txt” in Google Search Console

When you receive a notification about URLs being blocked by robots.txt in Google Search Console:

Verify the blockage

Use the URL Inspection tool to confirm that the URL is indeed blocked.

Assess intent

Determine if the blockage was intentional or accidental.

Modify robots.txt if necessary

If you want the page indexed, remove or narrow the rule in your robots.txt file that blocks it.
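Before deploying an edited robots.txt, it can help to check which URLs the change actually unblocks. The minimal sketch below compares the old and new files with urllib.robotparser. blocked_urls, the sample rules and the URLs are hypothetical. This parser ignores wildcards and applies the first matching rule, so confirm the final result with the URL Inspection tool.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, agent: str, urls: list[str]) -> set[str]:
    """Hypothetical helper: return the subset of urls that robots_txt blocks for agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {url for url in urls if not parser.can_fetch(agent, url)}


# Hypothetical edit: narrow a rule that blocked the whole blog down to its drafts
OLD = "User-agent: *\nDisallow: /blog/\n"
NEW = "User-agent: *\nDisallow: /blog/drafts/\n"
URLS = ["https://example.com/blog/launch-post", "https://example.com/blog/drafts/wip"]

before = blocked_urls(OLD, "Googlebot", URLS)
after = blocked_urls(NEW, "Googlebot", URLS)
print("Unblocked by the edit:", sorted(before - after))
print("Still blocked:", sorted(after))
```

In this example the edit unblocks /blog/launch-post, while /blog/drafts/wip stays blocked.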

Request recrawl

After making changes, use the URL Inspection tool again to request indexing.

Track common issues

Use tools that help you monitor and manage recurring errors and keep a history of every action taken on each URL. Try Revamper11.

Managing your robots.txt file effectively is crucial for both SEO and content protection. By understanding how to block specific URLs and specific bots, especially emerging AI crawlers, you can control who crawls your website while keeping it visible in search results. Well-maintained rules also help you manage your crawl budget by keeping crawlers away from low-value URLs.
